Bill Gates Tells Oprah About Edtech’s Past, Not Its Future

Personalized learning: this time it’ll be different.

Last week, in a nationally televised prime-time special, Oprah Winfrey interviewed a panel of experts on the implications of AI for our society. When it came to understanding the implications of AI for education, she turned to Bill Gates. “What does the world look like if you get the best of AI?” she asked.

Gates responded:

In classrooms across the United States, artificial intelligence allows students to move at their own pace and zero in on the skills they most need to improve.

Hang on. Wait. That was what Bill Gates said about “personalized learning software” in 2017, not “artificial intelligence.” Let me collect my notes. Here is what he told Oprah.

One approach that I’m excited about is called personalized learning: combining artificial intelligence, project-based learning, and traditional classroom work to let students move at their own pace, which frees up teachers to spend more time with whoever needs more personal attention.

Sorry, I smudged it. That was Gates in 2016 on “digital tools” not “artificial intelligence.” Let me try again with his response to Oprah:

What makes great teachers great is their ability to approximate this personalized learning experience in a classroom with 30 students. But it is very hard. Not all students learn in the same way. However, I believe artificial intelligence can help teachers come much closer to approximating this one-on-one, personalized teaching style.

I’m sorry, I obviously need an editor. That was Gates in 2013 talking about “adaptive learning software,” not “artificial intelligence.” Here he is with Oprah for 100% real this time.

There are huge opportunities to create more engaging and interactive ways of learning, including artificial intelligence that gives students and teachers important real-time feedback.

My size-of-a-planet bad. That was “personalized learning” back in 2012, not “artificial intelligence.” Here is what Gates told Oprah for real in 2024 last week.

The dream that you could have a tutor who’s always available to you, and understands how to motivate you, what your level of knowledge is, this software should give us that. When you’re outside the classroom, that personal tutor is helping you out, encouraging you, and then your teacher, you know, talks to the personal tutor. “Okay, what’s your advice about how I do things in class the next day.” They won’t have to spend time grading homework. They’ll immediately know how that went. [..] But the fact that as the teacher is saying, okay what should you work on, it’s working at your level, that personalized notion, you know we can see it’s already working pretty well.

Look, I too think it would be very exciting if this time it was different. But Gates and so many others have been banging the personalized learning drum for well over a decade, all to underwhelming results. If we are meant to take their predictions for this new personalized learning technology seriously, I think they should seriously account for the underperformance of the old personalized learning technology first.

Update

2024 Dec 18. Gates, or his team, or whoever, has taken a couple of these pages down. Read into that whatever you will. I know I am.

BTW

Here is the whole deal, folks: the current sorry millennia of personalized learning.

Featured Comment

One of the many underlying assumptions for the chatbot-tutor heralds is that students will learn from these products as long as they use the products in exactly the right way. But if the usefulness of your tool depends on users contorting themselves to accommodate the idiosyncrasies and deficiencies of your tool... it's not actually useful.

Odds & Ends

¶ While Gates offers Oprah his predictions of education’s future, teachers are drowning now. In the third annual Merrimack College Teacher Survey, Education Week’s research arm found:

Only 18 percent of teachers now say they are very satisfied with their jobs, down from 20 percent in 2023, but still higher than the 2022 rate of 12 percent.

When asked what would improve teacher well-being, the top five responses were:

1. A pay raise or bonus to reduce financial stress
2. More/better support for student discipline-related issues
3. Fewer administrative burdens associated with meetings and paperwork
4. Smaller class sizes
5. Permitting/encouraging mental wellness/health days

Maybe you can squint at #3 and build a savior role for AI around it. But you’d need to squint really hard, hard enough to ignore all the very obvious ways teachers need our help that have very little to do with software.

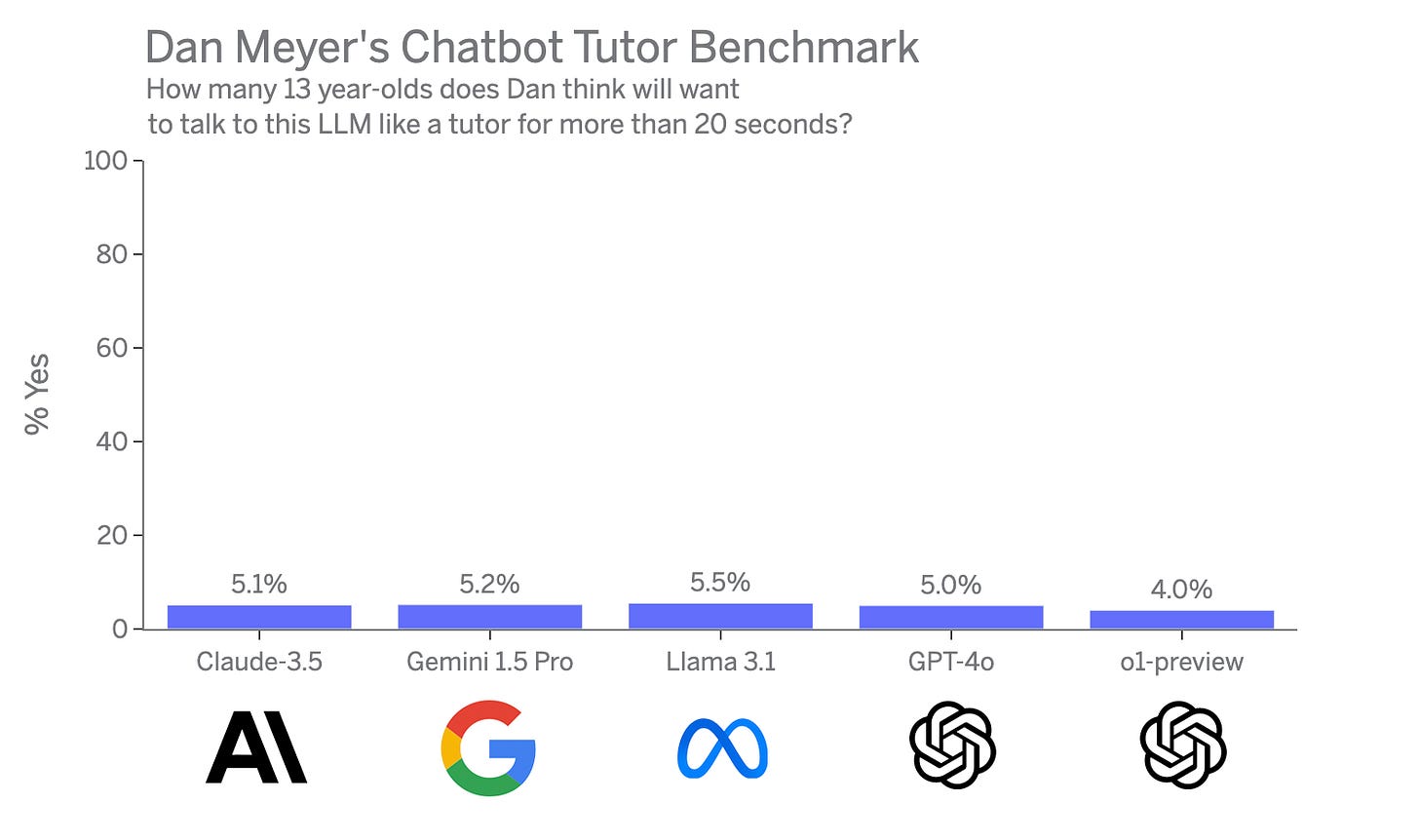

¶ Maybe you’ve heard that OpenAI released a new large language model last week. They test these models against standard benchmarks and I personally think it’s cool how they often get smarter. GPT-4o scored 13.4% on a competition math exam while this new model o1-preview scored 83.3%. Wow. There is, however, only one benchmark you need to think about when you’re evaluating these models for use as chatbot tutors, and you’ll only find it here.

The numbers are still dismal, folks. And unfortunately, the same changes that made o1-preview more effective for reasoning make it worse for chatting. Higher latency, longer wait times for responses—the kids I am surveying in my head are already opening up another tab.

¶ Stanford math education professor Jennifer Langer-Osuna brought some students and technologists together to ask the question, “What if we could design ways to use generative AI to help humans learn with… other humans?” The results are wacky and boundary-expanding in a very positive way. More low-stakes experimentation like this, IMO.

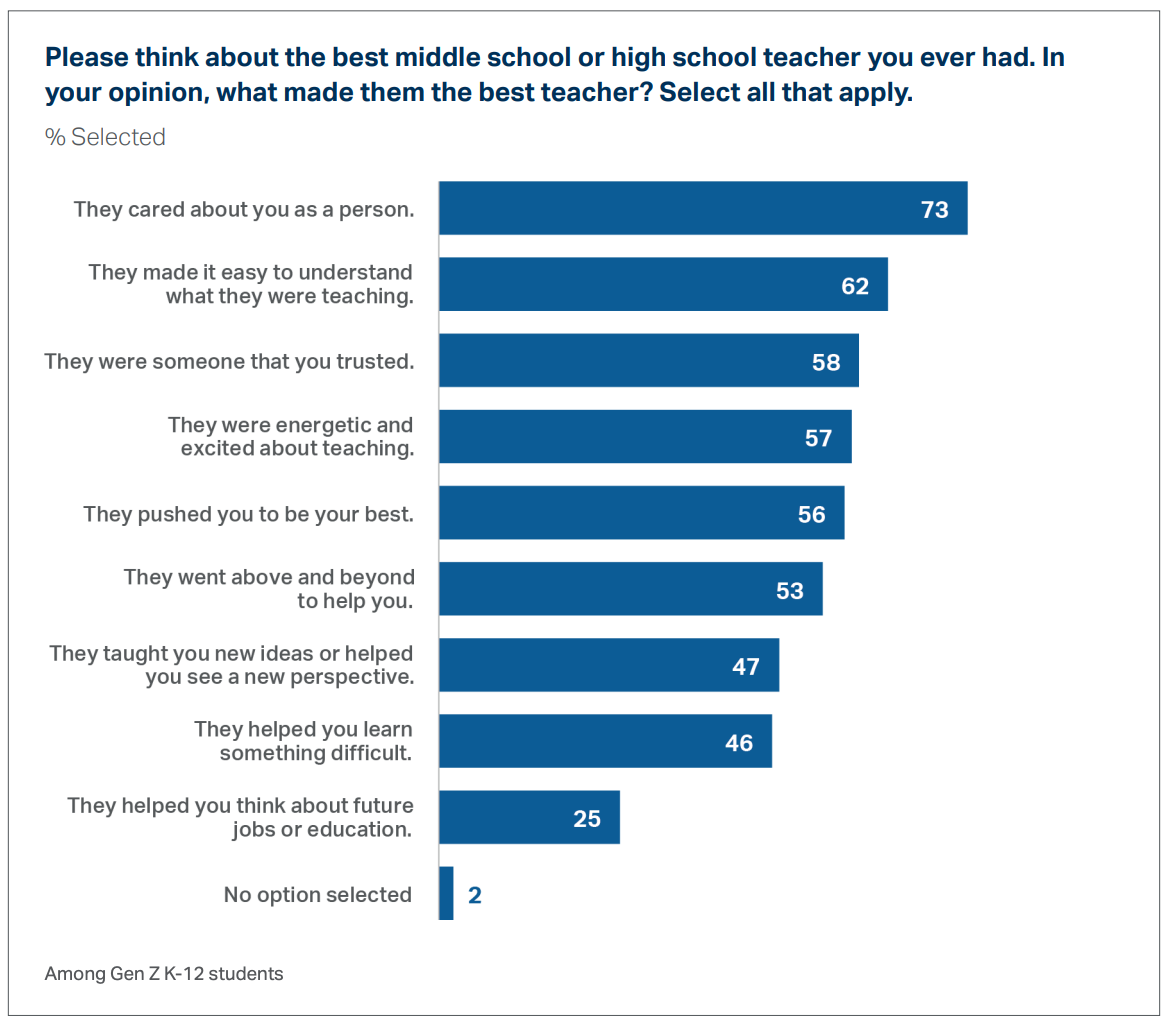

¶ The Gallup Voices of Gen Z Survey has lots of reasons for concern and a couple of reasons for cheer. I found the characteristics of students’ best teachers pretty interesting. Check out that jump from #2 to #1.

¶ Ed Tech Company AllHere, Once Lauded for Creating L.A. Schools’ $6M AI Chatbot, Files for Bankruptcy. It almost feels like rubbernecking at the scene of a car accident to post updates here, but it’s a very interesting story. Not an AI story, in my view, but a future edtech classic.

To follow up on the featured quote from Agasthya Shenoy:

What I've noticed with AI is that it is VERY sensitive to the specific wording of the prompt. So, if you are able to **exactly** articulate what you're looking for, the tools can be powerful. That works great for people who already have a strong foundation, and are just trying to resolve a small technical point. But often in the classroom struggling students have difficulty even formulating what they don't know, and no AI system is going to work well with a prompt like "I don't get it." One of the roles of good teachers is that they can pick up on students' weaknesses and figure out where they need help even when the student can't. I think ultimately AI will help those students who are already doing well, while not adding much value to those who are not. Unfortunately, that is counter to the whole premise of using AI in schools, which is to provide "personalized" tutoring to weaker students. So I'm skeptical that this is going to make much of a difference, and if it does it will probably just widen the achievement gap, not reduce it.

I appreciate your rigorous criticism of AI. I can't get beyond the weirdness of it all. Or I am missing the whole point.

With the data from communication of matters of current math, responses are generated. Seems this assures teaching/learning are constrained to a perpetual loop of contemporaneity. They can never get on a path to the world the technology behind it all is taking them.