Chatbots Have a Math Problem and a People Problem

A conversation with Sal Khan and a bunch of education foundation reps.

Last week, I was invited to chat behind closed doors with a working group on generative AI consisting of a bunch of representatives from education foundations. This group has money to pass around and is wondering, “Should we pass this money around to generative AI projects? How much and which kinds?”

I was invited to offer my perspective on classroom applications of generative AI. My perspective, as you may know, is more pessimistic than the median education technologist’s and certainly more pessimistic than the other guest this group brought in for the conversation: Sal Khan. Whereas I predict some modest quality-of-life improvements for teachers and students over the next five years, Khan has claimed that AI tools like his Khanmigo represent “the biggest positive transformation that education has ever seen.”

I gave some remarks to introduce my position. Khan did the same, and then there was some moderated Q&A. I thought I’d share my prepared remarks with you all.

My opinion on generative AI in education is unusual in the discourse. In the middle of two factions—one saying this will disrupt education negatively and another saying this will transform education positively—I am here to offer a different perspective about generative AI, about chatbots in particular, a brave perspective which is that:

It’s neat. 👍

Depending on where you fall in the discourse, you might hear some subtext underneath “it’s neat.” I want to tell you that you are not wrong. There is subtext and I want to bring it up to the text.

To the pessimists, when I say, “It’s neat,” what I mean is, “It will be fine.” ELA teachers will undergo a bunch of soul-searching. They’ll have to re-think assessment and assignments, for example, but math did this with graphing calculators and Wolfram Alpha and Photomath. You will find your footing again.

And to the optimists—the operators, funders, prognosticators, newly minted genAI experts and consultants who sent the hype cycle skyrocketing Q1 and Q2 this year—when I say, “It’s neat,” what I am saying is “It’s just neat.”

What I am saying is “I don’t think you understand the assignment.” Or at least we understand the assignment very differently.

The Assignment

The assignment is to take knowledge that the brightest adults in our world developed over thousands of years, and help kids learn it in twelve, all while they’re beset by acne and hormones and body odor and social media, all within a social and economic system that provides fewer and fewer resources for working class kids, that provides instead higher and higher rates of child poverty, child homelessness, child malnutrition, incidences of personal and systemic racism, the incarceration of their parents, all while the answer to the question “what will happen if I work hard in school?” seems more likely to be “lifelong student debt and permanent entry-level wages” rather than “financial prosperity.”

That is the assignment, and you bring to me … a chatbot. A CHATBOT??

Look it’s neat … it’s neat like a kid showing up to a raging house fire in a firefighter’s suit telling the crew “I got this!”

Like neat, I love your hustle, but I gotta … we gotta … look: we have a lot of work to do here okay?

Chatbots struggle enormously with that work. They struggle in two particular ways.

Chatbots have a math problem and a people problem.

Chatbots have a math problem.

Chatbots have a math problem, which is that they only speak a particular dialect of mathematics, one that students who are learning math do not easily speak.

When you try to help students learn something new, that knowledge is not formed or expressed particularly well. Kids communicate in gestures, sketches, scribbles, murmurs, ums, and ahs. They overgeneralize earlier ideas, taking them well past their “use by” date. Their train of thought derails and then gets back on track all in the same sentence.

To communicate with chatbots, students have to shape all of that thinking into a single expression of written or verbal text. They have to package it up and ship it to the chatbot. The chatbot then does something with it that I would describe as truly unprecedented and very neat and ships it back to the students who must then map the formal contents of that package back onto the informal dialect they speak.

That translation process represents a cognitive tax, one which students pay coming and going, one which they pay alone.

There are other ways that teachers, students, and devices can interact, ways that accommodate and even invite the informal mathematical dialect.

For example, in the activity Function Carnival from Amplify’s core math curriculum, we start by asking students to watch an animation, to say something they noticed, to draw the path of the cannon person in the air with their finger, to sketch it on a graph.

Noticings, gestures, sketches. We invite, accept, and develop all of them. Students need to be understood in their mathematical dialect and chatbots do not understand it.

Chatbots have a people problem.

Chatbots have a people problem, which is that they have a problem with people.

Not chatbots themselves, which are relentlessly peppy and upbeat and I love that about them. Rather the chatbot theory of learning, which is just an upgraded version of the personalization theory of learning, has a problem with people. That problem is that it has no idea what to do with the people in the classroom.

Personalized learning says that, in a classroom with even this few students, there is far too much variability between learners for the teacher to manage, much less use. Their learning paths are so different as to be unrecognizable one to another. Personalized learning declares that these students are liabilities to each other, rather than assets. Therefore we must segregate the students from each other and provide them with a personalized set of resources, often delivered through a laptop and headphones.

This theory of learning has not worked relative to other models of schooling because, in this model, the teacher functions as second-tier customer support, essentially handling the student questions that the software could not, and teachers are good for much more than this.

This theory of learning has not worked, additionally, because students do not buy it. Students do not believe that their classmates are liabilities. Much more often students like their classmates and do not feel limited by them and would rather learn with them if the option were available.

And students especially do not believe that they themselves are liabilities to their classmates. No one wants to believe that about themselves.

Skilled teachers know how to work with this learner variability, creating moments of learning that are greater than the sum of what individual learners know.

For example, in the previous lesson, a teacher might identify two students with interesting graphs of the cannon person’s height off the ground, tell the class to stop what they’re doing and check the graphs out, to name what’s different and the same about them, to name what’s good about both, to make a third graph that includes the best features of each.

I invite you to wonder what students have learned about math and about themselves as learners in that kind of moment.

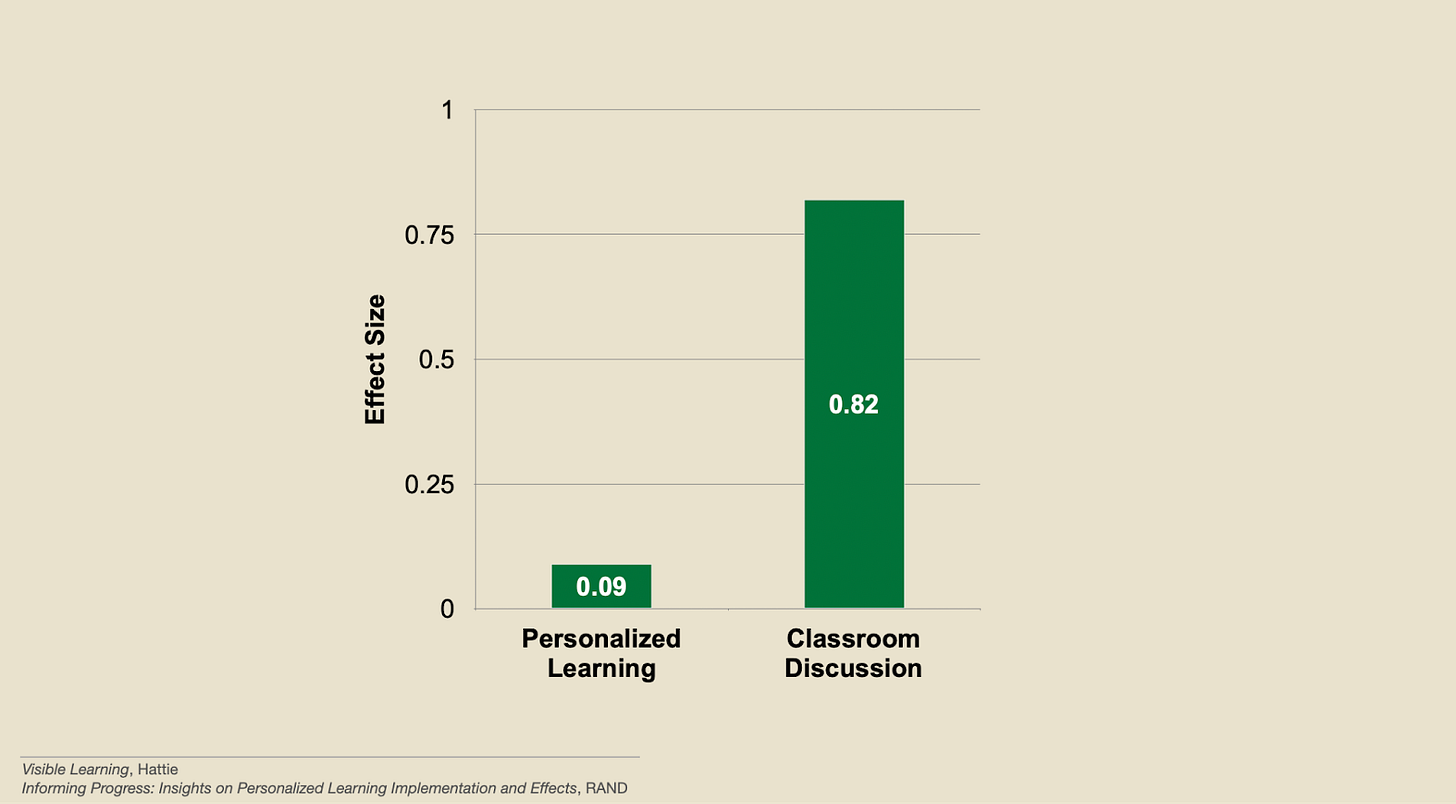

When I say the chatbot theory of learning has not worked, I mean the Gates Foundation funded a national study by RAND finding no significant results for the personalized learning cohort in reading and significant, if pretty modest, results in mathematics (p. 34). All while classroom discussion has an effect size, via Hattie, many times that of personalized learning.

All while RAND found the result of telling students that they and their classmates are liabilities to each other, rather than assets, was a decrease in feelings of belonging and community in the personalized learning condition (p. 24).

This is the legacy chatbots are poised to inherit.

Look: math students are in school to figure out math and figure out themselves at the same time, all against a social and economic backdrop that has rarely been more oppressive to kids. If you think the answer here is “chatbots,” then I’m telling you we do not understand the question in the same way.

Chatbots are neat, but in K-12 math education, they are not much more than that.

Odds & Ends

We just kicked off the new season of my Math Teacher Lounge podcast with Bethany Lockhart Johnson. In this season, we’re tackling fluency—what it is, what it isn’t, and how to develop it. In the first episode with Jason Zimba, I confess to my own conflicted feelings about fluency and my hope that I’ll resolve that conflict during the season.

Another podcast I’m enjoying: Teach Me EdTech from Jess Stokes. The most recent episode discusses “Burnout-Proofing EdTech Products for Teachers,” in which edtech operators kind of admit that “even while we’re promising good stuff, we’re asking teachers for time and energy.”

OpenAI released multi-modal functions for ChatGPT. You can now talk to it and upload images. When asked recently what it would take for chatbots to become of actual transformative use in classrooms, I named ambient, multi-modal sensory input as one of (a bunch of) prerequisites.

Ben Werdmuller compares generative AI to web3, which experienced a massive hype cycle like genAI and is now settling into its plateau of productivity. I find this pretty compelling.

There is a science of reading. Where is the science of math? Michael Pershan describes the differences between reading and math and why they likely necessitate sciences of math, plural. No one writes in this genre like Pershan.

I learned a lot from Sequoia Capital’s article on Generative AI’s Act Two, which admits what few actors will, that “wow we kinda let the discourse get away from ourselves back there.” They name user retention as a challenge going forward. Lots of people created lots of accounts on these generative AI platforms but people don’t use them as often as they use incumbent apps.

Education Week asked teachers, “What are some examples of how technology is used poorly to teach math?” They offered 25 quotes, which are by no means comprehensive or statistically representative, but I find myself parched for student and teacher perspectives on math edtech right now.

You made a good reads list on Daily Kos today for this one! 🙂 https://www.dailykos.com/stories/2023/10/1/2196651/-Sunday-Good-Reads-for-October-1st-2023

I'm not sure how on-point this comment is here, but I had an experience with ChatGPT this weekend that I must share.

I was putting together a test for my 6th graders and wanted to have some examples of rectangular prisms that had the same volume but different surface areas, and then ones with the same surface area but different volumes. I thought perhaps ChatGPT, which is bookmarked on my browser and never used, would be of great help.

So I put in my request for three such prisms (same SA, diff V), and ChatGPT came back with three prisms in which the SA was numerically EQUAL to the V. Not what I wanted, but amusing. I rephrased the question. I got different prisms, but the same thing: SA = V. I tried rephrasing it several times and I filled out the feedback, all to no avail.

Aha! I realized I was still running ChatGPT v.3. Didn't version 4 come out six months ago? (This is how often I use it.) So I got into version 4, and nothing changed. I asked, not for three examples, but just two examples. Then I realized (embarrassingly late) that all I needed to do was ask for three prisms with the same SA, and say nothing about the volume, and I'd likely get what I wanted. So I did that, and when I looked at the result, what popped out to me was that one of these SA measures was impossibly small given the dimensions it provided. For the first time, I ran the dimensions by hand, and OMG, I kid you not, ChatGPT was miscalculating total surface area.

So I taught it the formula. In subsequent attempts, it always showed me the formula as it provided the answers, but it STILL got the wrong answer. No matter what I did, it kept getting it wrong, and it wasn't even the kind of mistake where I could say, "Oh, I see what you did there".

I wasted well over an hour, gave up, and had a couple of examples of my own in about five minutes. 😑
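For anyone who wants to check this kind of arithmetic rather than trust a chatbot with it, here is a minimal sketch in Python. It is an illustration only, not anything the commenter or ChatGPT produced. The function names, the restriction to whole-number side lengths, and the 12-unit side limit are all assumptions made for the example. It uses the standard formulas for a rectangular prism, SA = 2(lw + lh + wh) and V = lwh, and groups prisms that share one measure so the other can be compared.

```python
from collections import defaultdict
from itertools import combinations_with_replacement


def surface_area(l, w, h):
    """Total surface area of a rectangular prism: 2(lw + lh + wh)."""
    return 2 * (l * w + l * h + w * h)


def volume(l, w, h):
    """Volume of a rectangular prism: lwh."""
    return l * w * h


def prisms_by(measure, max_side=12):
    """Group whole-number prisms (sides 1..max_side) by the given measure.

    Each prism appears once, with its sides listed smallest to largest.
    The max_side cap is an arbitrary choice for this sketch.
    """
    groups = defaultdict(list)
    for dims in combinations_with_replacement(range(1, max_side + 1), 3):
        groups[measure(*dims)].append(dims)
    return groups


# Same surface area (46 square units), different volumes.
same_sa = prisms_by(surface_area)[46]
for dims in same_sa:
    print(dims, "SA =", surface_area(*dims), "V =", volume(*dims))

# Same volume (24 cubic units), different surface areas.
same_v = prisms_by(volume)[24]
for dims in same_v:
    print(dims, "V =", volume(*dims), "SA =", surface_area(*dims))
```

With these assumptions, the first group contains the prisms 1×1×11, 1×2×7, and 1×3×5, which all have a surface area of 46 but volumes of 11, 14, and 15. Changing the target numbers or the side limit gives other sets to choose from.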