Generative AI is Best at Something Teachers Need Least

Here's why customizing word problems isn’t generative AI’s killer app.

Whenever anyone lists the most promising uses of generative AI in a kid’s education, it is nearly certain they will describe its ability to personalize the text of a word problem to a student’s interests. For example, here is Peter Coy in the New York Times this week:

I’m optimistic, though, that artificial intelligence will turn (some) haters into fans. A.I. can custom-make lessons based on each student’s ability, learning style and even outside interests. For example, imagine teaching ratios by showing a Yankees fan how to update Aaron Judge’s batting average.

This idea is everywhere. I attended a convening of grant-funded edtech projects recently and nearly half of the grantee presentations featured a word problem customizer. MagicSchool includes one of them among its (I’m estimating quickly here) several hundred AI applications. So does Khanmigo.

Two things.

First, this doesn’t work very well.

I can find a study from Taiwan, more than 20 years old, that reported positive results for this kind of intervention. Other researchers have found “no significant increase in student achievement when the personalization treatment was used regardless of student reading ability or word problem type.” A more recent study found “personalization did not affect word problem performance, enjoyment or cognitive load.” A 2024 study found some positive effects for particular subgroups of students and, for others, “significantly negative effects on their feelings of mathematics enjoyment.” At best, this seems like a very fragile intervention.

Here is why this doesn’t work very well.

Peter Coy asks us to “imagine teaching ratios by showing a Yankees fan how to update Aaron Judge’s batting average.” We don’t have to imagine it! Look what I made with GPT-4o.

On the left, you have a worksheet teaching ratios with a general set of real-world examples. On the right, you have a worksheet teaching ratios personalized with examples from baseball!

Look carefully! Do you notice a certain sameness here? Do you sense that maybe these worksheets aren’t all that different after all?

The problem with word problems isn’t the words, it’s the work.

The words are personalized but the student’s work isn’t personal. Success on both worksheets looks like getting the same answer as everyone else. The student imprints very little of themselves on either worksheet. Consequently, the student feels wasted and unwanted in both.

There is another way.

You can ask every student the same question and it will feel personalized so long as students are able to offer something personal in their response, something of themselves, something that others recognize as valuable. A randomized controlled tweet:

This is it. It’s only an existence proof but it’s an evocative one.

People want to know and be known by one another. You can find in every kid a belief, sometimes harbored very secretly, that they have value to offer the world. When I observe teachers who invite that value from students, who name that value and name their students as valuable, it honestly looks like they’re using a cheat code. They’re playing with their class, not against their class, and everyone is running up the score. Students know what it feels like to have their education done to them rather than with them and they vastly prefer the latter. I will keep banging this drum until they put me in the ground.

As I watch most of the edtech industry take its new toy toward a desiccated version of personalization, part of me wants to yell “you’re going the wrong way!” and the other part of me just wants to let ’em have their fun. Tech and business leaders have been driving their car into this same brick wall for decades now, and I have never seen the roads clearer for people who want to use technology to support cognitive and social relationships between humans.

An easy way to not make it in edtech.

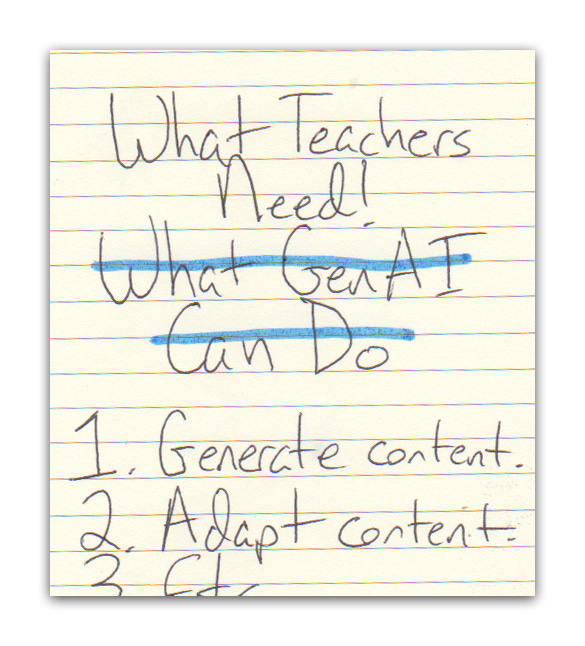

The larger issue here is that technologists are building towards generative AI’s strengths rather than teacher and learner needs.

On one path in edtech, you can write down the greatest needs of teachers and learners as you understand them. Then you can write down the top strengths of your technology as you understand it. And then you can figure out where your top strength meets their greatest need.

Right now, most edtech operators are on another path. They can name “content generation” and “content adaptation” as two of the top strengths of generative AI. They do not understand the needs of teachers and learners, however, so when it comes time to name them they …

Good luck and watch out for that brick wall.

Odds & Ends

¶ Two ways I have used GPT-4o this week. (1) I asked it to convert a group’s music preferences into an animal mascot. (2) I asked it to reconstruct a recipe given a description of something I enjoyed at a restaurant recently. Neat! 👍

¶ Sal Khan is on a press tour for his new book. (Thank you for your interest, but unfortunately I was not sent a review copy.) You’ve got MSNBC, CNBC, CBS, CNBC again and maybe others. I just want to name (for now) how surprised I am by the adversarial tone of the interviews. The interviewers press Khan on matters of cost, hallucination, the digitization of human relationships, plagiarism, etc. I would have expected business media to be friendlier terrain here honestly.

¶ Related: Khan Academy is making Khanmigo free for US teachers through a donation of compute from Microsoft. Nothing but good vibes here, honestly. If it’s useful teachers will use it and if it isn’t they won’t. I would encourage us to pack up exactly zero (0) of our other teacher support efforts, though.

¶ Still related: I’m very interested to see how many of the schools and districts purchasing generative AI products continue to renew those subscriptions after ESSER funds and philanthropic subsidies expire. For example: here is Newark Public Schools debating whether or not to renew their Khanmigo subscription. “The district is monitoring its implementation but has not said how Khanmigo is used in classrooms, what students and teachers think about the tool, or why there is a need for it in Newark.”

¶ People take it as pretty self-evident that generative AI will support special education teachers more than others, helping those teachers author individualized education plans and other paperwork. Yet in April 2024, Education Week found that 0% of principals and district leaders reported using generative AI extensively in their special education programs. Bonus survey: Pew Research finds that only 6% of teachers say there is generally “more benefit than harm” when it comes to using artificial intelligence tools such as ChatGPT in K-12 education.

¶ I don’t know how to score this idea but it interests me. A teacher is trying to disincentivize LLM plagiarism in her students’ forum posts, so she asks students to rate the posts specifically for their humanity. Does it read like a human wrote this? Does it have a distinctive voice or tone? Or does it sound like you shoved the entire internet into a Vitamix? Interesting alignment of incentives toward originality.

¶ I read the big generative AI in education paper from Google so you don’t have to. The very-interesting: how to benchmark an LLM for tutor quality. Humans don’t agree! And what they agree on is hard to measure! The not-at-all-interesting-to-me: their efforts at tuning their vanilla LLM (Gemini) to become a better tutor (LearnLM-Tutor). This is like asking, “Who can run the 100 meter dash faster—a Roomba or a customized Roomba?” all while Usain Bolt is standing there in lane three.

¶ Paper has had a tough year. (The company, not milled trees.) After seeing lots of interest in its tutoring product during and immediately after the pandemic, it watched large customers cancel their contracts. Paper has now announced product changes, and one sentence from that announcement seems worth underlining for anyone working in tutoring, digital or otherwise: “It became increasingly clear that while we excelled in supporting self-motivated learners, a critical gap remained in addressing the needs of students requiring more structured support.”

¶ Grantmakers for Education has posted my panel discussion on “The Future of Math: Innovations in Classroom Technology.” We got through maybe half of the pre-planned questions and no one cared. Great group and discussion.

¶ Expert math educator Dylan Kane reviews last week’s GPT-4o math tutoring video: “There’s a conflict in moments like this between helping a student solve a problem and helping a student learn something.”
