How We Built Discussion Moments

And how we decided NOT to build it.

In last week’s newsletter, I described Discussion Moments, some new technology we designed at Amplify to make whole-class discussions easier and more productive for teachers and students. I’ve been working on Discussion Moments for over a year, which I realize is a very long time in the vibe-coding era. I can imagine some of you are wondering why we didn’t just type “make something useful for teachers” into Claude Code or Clawdbot or whatever and let the agents swarm it out over lunch.

We took a different approach and I wanted to share a few notes about how my team did and didn’t work on this feature here at Amplify, because I’m proud of us.

Start with the problem, not the solution.

Early 2023 was a time of profound foolishness in edtech. So many people started with the Large Language Model as the solution and worked backwards to define a problem. These people would hold a microphone to their face, making their voice louder on purpose, and then say things like:

Hallucinations are good, actually. Fact-checking a computer is a game that kids and teachers have always wanted to play.

It’s good for kids to talk to chatbots impersonating historical and literary figures. This is an ideal way to teach history and literature.

It’s good for kids to ask chatbots to help them with a first draft of their essay. Ideally, we would not ask students to do this work of checking their understanding and organizing their thinking.

This was all untrue, of course. Experts in learning and history and writing were emphatic that these uses of an LLM would inhibit knowledge formation, reproduce biases, and undermine critical thought. But when all you have is a Large Language Model, you start to see the problems of learning as Large Language Model-shaped.

Meanwhile, I spent early 2023 basically the same way I spend every year: trying to understand the problems of teaching and learning, talking to teachers, substitute teaching monthly, working in classrooms weekly, binging classroom video, and interviewing our front-line teacher coaches. This was slow work, but it helped me better understand a real, rather than imagined, problem of teaching and learning: facilitating whole-group discussions.

Make a solution by hand.

I started playing with the idea that teachers had too much information about their students, that all of that information was a blessing but also a burden. What kind of resource would help teachers make good use of that information?

At this point, lots of companies were willing to give teachers ten pages of analysis about their students, applying clustering methods from Natural Language Processing, drowning teachers in slightly less information but still drowning them, leaving them without an obvious “So what?”

I plucked ten classes out of our database at random, classes that had just finished one of our assessments. Then I looked at student thinking in those classes and asked myself, “If I were this teacher’s assistant, how would I help them make the best use of five minutes tomorrow?” I tried to assume this teacher was operating on maximum stress and minimum extra time.

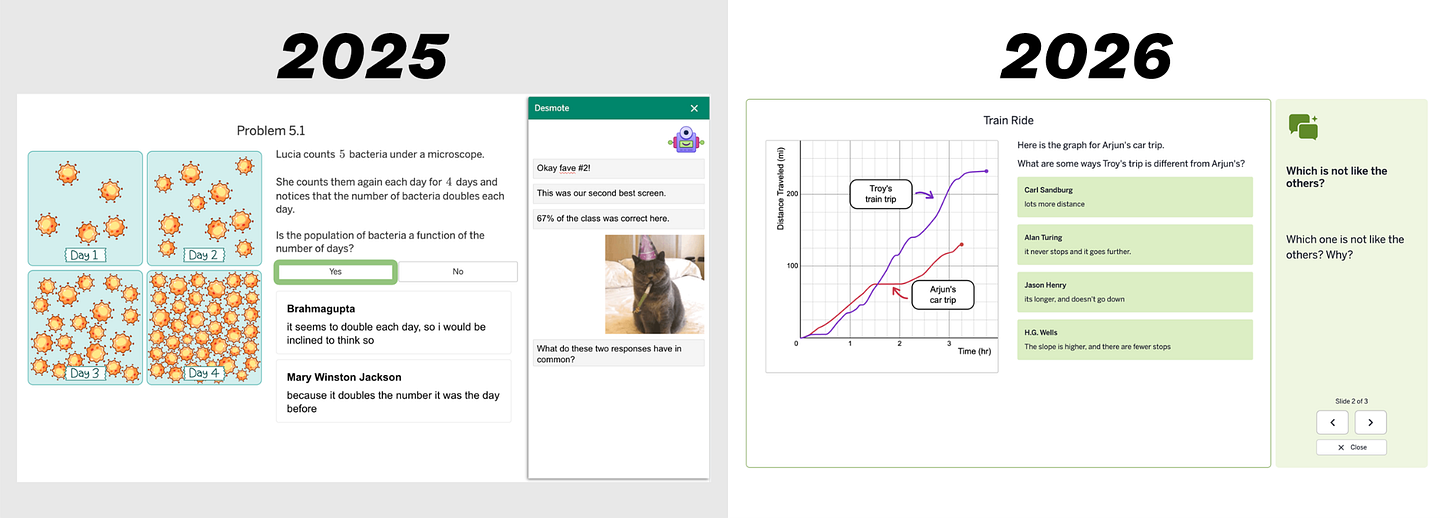

Then I prototyped the first Discussion Moment in Google Slides.

You can see the similarities. Several student responses. A question to frame them. A narrative on the side. All made with love and by hand. Then I emailed it out to those random teachers.

I just made this slideshow for you based on your class’s work on the 8.5 end unit assessment. I’m curious if you think it’d be useful for you. If you have any thoughts I’d love to know.

I would have considered a single negative response or a bunch of non-responses enough reason to scrap the prototype. Instead, the responses from these strangers were pretty effusive.

I appreciate you sharing this with me. I love it!!! I really appreciate how it summed up the responses in my class and then gave me follow up questions for them. Can I share these with my class as I would love to have discussions based around the prompts given?

Yes, yes you may!

All of that investigatory work is slow. But I needed to know: is this thing even the right shape? Is this something teachers even want to hold in their hands? Does it fit their grip? Are we asking them to do something unnatural? Are we taking them off their “desire path”? Are we working for or against their aspirations for their work? That work can feel quite slow, until you consider the cost of making the wrong thing.

Now try to automate & systematize.

We asked ourselves, “What could we do if we only had seconds of turnaround time rather than hours? Which parts of that manual process could we pass off to a machine and at what cost to quality?”

Constructing the discussion slide? Advantage: computers. A computer can populate a slide faster than a human and without loss of quality.

Deciding how to construct the discussion slide? Advantage: humans. Computers could make some fine guesses here, but this work is largely a matter of taste, and we hire people with great taste. Asking humans to do that work has required a non-trivial but one-time investment across a countable number of activities in our curriculum.

Picking responses from a class that match given search criteria? Advantage: computers. We tested LLMs here and found their accuracy quite high, provided they were taken quite close to the target by a human.

We turned this division of labor into a set of authoring interfaces for humans and a set of API calls for an LLM.
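For readers who think in code, here is a minimal sketch of that division of labor. It is not our implementation. The `MomentSpec` shape, the `build_slide` function, and the `keyword_selector` stand-in are all hypothetical names I made up for illustration, and the stand-in does naive substring matching where a real system would call an LLM.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class MomentSpec:
    """Hypothetical record a human author fills in once per activity."""
    framing_question: str
    criteria: List[str]  # plain-language descriptions of responses worth surfacing


# A selector receives one criterion and the class's responses, and returns the matches.
# This is the slot where an LLM call would sit; that call is not shown here.
Selector = Callable[[str, List[str]], List[str]]


def keyword_selector(criterion: str, responses: List[str]) -> List[str]:
    """Stand-in selector (an assumption): keep responses containing the criterion text."""
    needle = criterion.lower()
    return [r for r in responses if needle in r.lower()]


def build_slide(spec: MomentSpec, responses: List[str], select: Selector,
                per_criterion: int = 1) -> Dict[str, object]:
    """Machine work: populate the slide from the human-authored spec."""
    picked: List[str] = []
    for criterion in spec.criteria:
        # Skip responses already picked for an earlier criterion.
        matches = [r for r in select(criterion, responses) if r not in picked]
        picked.extend(matches[:per_criterion])
    return {"question": spec.framing_question, "responses": picked}


spec = MomentSpec(
    framing_question="What do these two strategies have in common?",
    criteria=["table", "graph"],
)
class_responses = ["I made a table of values.", "I guessed.", "I sketched a graph."]
slide = build_slide(spec, class_responses, keyword_selector)
print(slide["responses"])
```

The point of the shape is that the taste lives in the spec, which a person writes once, while the selector and the slide builder run in seconds for every class.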

Measure big & small & qual & quant.

We’re running a pilot with 200 teachers. That includes a pre- and post-survey. Our user experience team will conduct interviews afterwards. And we also spent a lot of time setting up our digital eventing and logging system, so during the pilot we’re able to answer questions like:

Is this particular Discussion Moment activating as often as we’d want?

Are teachers opening it?

Were the right responses selected?

And then respond to those answers daily with product changes.
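The first two of those questions reduce to counting events. Here is a rough sketch, assuming a hypothetical log where each event carries a `moment_id` and a `type` of `"eligible"`, `"activated"`, or `"opened"`. Those field names and event types are my assumptions for illustration, not our actual schema. The third question, whether the right responses were selected, needs a human looking at the slide and is not something this kind of count can answer.

```python
from collections import Counter
from typing import Dict, List, Optional


def moment_rates(events: List[Dict[str, str]], moment_id: str) -> Dict[str, Optional[float]]:
    """Activation and open rates for one Discussion Moment (hypothetical event schema)."""
    counts = Counter(e["type"] for e in events if e["moment_id"] == moment_id)

    def ratio(numerator: str, denominator: str) -> Optional[float]:
        # Return None rather than dividing by zero when nothing has happened yet.
        return counts[numerator] / counts[denominator] if counts[denominator] else None

    return {
        "activation_rate": ratio("activated", "eligible"),  # how often it fired when it could
        "open_rate": ratio("opened", "activated"),          # how often teachers opened it
    }


events = [
    {"moment_id": "m1", "teacher_id": "t1", "type": "eligible"},
    {"moment_id": "m1", "teacher_id": "t2", "type": "eligible"},
    {"moment_id": "m1", "teacher_id": "t3", "type": "eligible"},
    {"moment_id": "m1", "teacher_id": "t4", "type": "eligible"},
    {"moment_id": "m1", "teacher_id": "t1", "type": "activated"},
    {"moment_id": "m1", "teacher_id": "t2", "type": "activated"},
    {"moment_id": "m1", "teacher_id": "t1", "type": "opened"},
    {"moment_id": "m2", "teacher_id": "t1", "type": "eligible"},
]
rates = moment_rates(events, "m1")
print(rates)
```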

Patience is product.

It is so much easier to do this slow work when you have patient company leadership. Several months after ChatGPT made its splash, our competitors rushed to market with the exact same set of features. Chatbots. Autograders. Practice set generators. Lesson shorteners. Etc. Not bad ideas, but definitely the same ideas, not one of which, to my knowledge, has ever cost us a sale. (Seriously, I could count on zero hands the number of teachers who have asked me why we don’t have a lesson shortener.)

In spite of the massive pressure from the tech sector to appear forward-thinking, to do something, our board and leadership gave our product team plenty of time to make our thing, to work slowly and carefully, to make a marriage of technology and teaching that is uniquely Amplify’s.

Mailbag

Dylan Kane is currently on a No Tech January, which makes his kind feedback on our tech a little extra special:

I’m impressed with the feature. I was skeptical when Dan alluded to working on something with LLMs a while back, but this is a solid use case and I think will add some solid value for users of the Desmos curriculum. Great work from the Desmos team.

Love this approach. The decision to prototype in Google Slides before building anything automated is brilliant but so rare nowadays. I've seen too many product teams (including ones I worked with) skip that validation step and automate the wrong thing entirely. The LLM hype in 2023 really did push everyone toward solution-first thinking instead of problem-first. That patience from leadership also matters way more than people realize.

The power of human thinking and examining evidence. Thanks Dan et al.